The square Fried geometry[2] commonly used for sensing in Shack-Hartmann (SH) based adaptive optics (AO) systems is insensitive to a checkerboard-like pattern of phase error called waffle. This zero-mean-slope phase error is low over one set of wavefront sensor (WFS) sub-apertures, arranged like the black squares of a checkerboard, and high over the other set, arranged like the white squares.

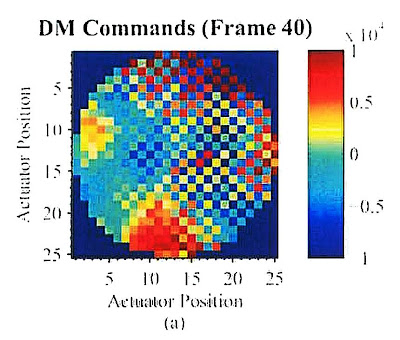

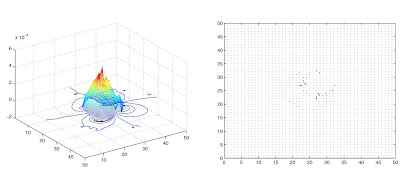

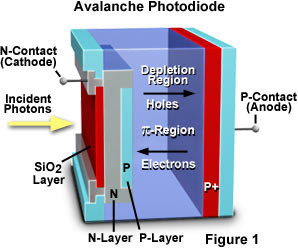

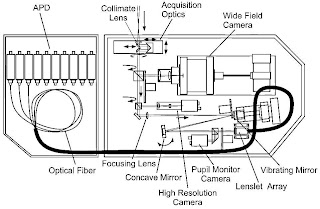

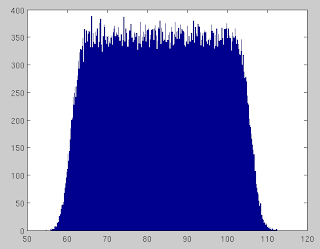

Waffle mode is essentially an astigmatic pattern repeated at the period of the SH sampling over the entire aperture. Such a wavefront has zero average slope over every sub-aperture and hence produces zero SH WFS output. Fig. 1 illustrates the waffle pattern on the deformable mirror (DM).

Figure 1: Waffle mode on the DM (image from[3])
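The zero-slope property can be checked numerically. The following is a minimal sketch, assuming an idealised Fried geometry in which the phase points sit at the corners of square sub-apertures and each slope is the mean phase difference across the sub-aperture; the function name `fried_slopes` and the grid size are illustrative choices, not taken from the cited papers.

```python
import numpy as np

def fried_slopes(phase):
    """Average x and y slopes over each sub-aperture (idealised Fried geometry).

    Phase points sit at the sub-aperture corners, so an n x n grid of
    phase points gives (n-1) x (n-1) sub-apertures.
    """
    p00, p01 = phase[:-1, :-1], phase[:-1, 1:]
    p10, p11 = phase[1:, :-1], phase[1:, 1:]
    sx = 0.5 * ((p01 + p11) - (p00 + p10))  # mean difference along columns
    sy = 0.5 * ((p10 + p11) - (p00 + p01))  # mean difference along rows
    return sx, sy

n = 8  # illustrative grid size
i, j = np.indices((n, n))
waffle = 1.0 - 2.0 * ((i + j) % 2)  # +1 / -1 checkerboard
tilt = j.astype(float)              # unit slope along x, for comparison

wx, wy = fried_slopes(waffle)
tx, ty = fried_slopes(tilt)

print("largest waffle slope:", max(np.abs(wx).max(), np.abs(wy).max()))
print("mean tilt slopes (x, y):", tx.mean(), ty.mean())
```

The tilt is sensed as a unit x slope everywhere, whereas every slope produced by the checkerboard is zero, so the sensor output carries no information about the waffle amplitude.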

Efforts have been made to understand how to control this and other "blind" reconstructor modes[1,4,5]. Advances in wavefront reconstruction approaches have improved AO system performance considerably. Several physical processes can cause waffle, including misregistration and high noise levels on the WFS photosensor.

Atmospheric turbulence and the Waffle modes

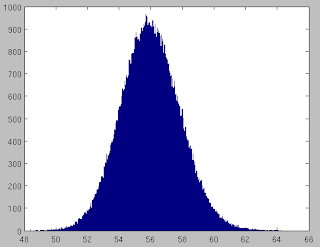

The initial uncertainty of the atmospheric phase[1] is given by Kolmogorov statistics[6,7]. The atmosphere contains far less power at high spatial frequencies than at low ones. Atmospheric turbulence has a fractal structure with much more power at large spatial scales, so large swings of the atmospheric wavefront between adjacent phase points are physically unrealistic. This fortuitously suppresses waffle, which is a high-spatial-frequency pattern.
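To give a feel for the size of this effect, the sketch below evaluates the standard Kolmogorov phase power spectral density, which falls off as the spatial frequency to the power -11/3, at the waffle frequency and at the lowest frequency that fits in the aperture. The telescope diameter, actuator pitch and Fried parameter are hypothetical values chosen only for illustration.

```python
import math

def kolmogorov_phase_psd(f, r0):
    """Kolmogorov phase PSD in rad^2 m^2; f in cycles/m, r0 in m."""
    return 0.023 * r0 ** (-5.0 / 3.0) * f ** (-11.0 / 3.0)

# Hypothetical system parameters (not from the cited papers).
diameter = 3.6   # telescope aperture in m
pitch = 0.1      # actuator spacing projected onto the pupil in m
r0 = 0.15        # Fried parameter in m

f_low = 1.0 / diameter
# The checkerboard has a period of two pitches along both axes, so its
# spatial frequency vector is (1/(2*pitch), 1/(2*pitch)).
f_waffle = math.sqrt(2.0) / (2.0 * pitch)

ratio = kolmogorov_phase_psd(f_waffle, r0) / kolmogorov_phase_psd(f_low, r0)
print(f"PSD at waffle frequency relative to 1/D: {ratio:.2e}")
```

Under these assumptions the atmosphere supplies several orders of magnitude less power at the waffle frequency than at the aperture scale, which is why waffle seen in practice tends to come from the system rather than from the turbulence.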

Waffle modes in wavefront sensing

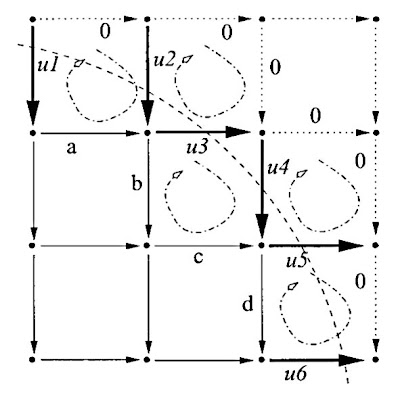

The first step is to define the phase differences in terms of the phase measurements. Most gradient and curvature wavefront sensors do not sense all possible modes. For instance, the Fried geometry cannot measure the so-called waffle mode. The waffle mode occurs when the actuators are moved in a checkerboard pattern: all white squares change position while the black squares remain stationary. This pattern can build up over time; it can be reduced by using different actuator geometries or by filtering the control signals so as to suppress this mode. The curvature WFS is not sensitive to waffle mode error, although it has its own particular null space. Curvature sensors are typically not used for AO systems with many sensing channels because of their noise propagation properties[8].
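The set of unsensed modes can be made explicit by writing the sensor as a matrix and inspecting its null space. The sketch below assumes the same idealised Fried geometry (phase points at sub-aperture corners on a full square grid); `fried_matrix` is a hypothetical helper written for this illustration.

```python
import numpy as np

def fried_matrix(n):
    """Slope operator H for an n x n grid of phase points (Fried geometry).

    Rows: x slopes, then y slopes, of the (n-1)^2 sub-apertures.
    Columns: phase points in row-major order.
    """
    idx = np.arange(n * n).reshape(n, n)
    c00, c01 = idx[:-1, :-1].ravel(), idx[:-1, 1:].ravel()
    c10, c11 = idx[1:, :-1].ravel(), idx[1:, 1:].ravel()
    m = c00.size
    r = np.arange(m)
    H = np.zeros((2 * m, n * n))
    # x slope: right-hand corners minus left-hand corners
    H[r, c01] = 0.5
    H[r, c11] = 0.5
    H[r, c00] = -0.5
    H[r, c10] = -0.5
    # y slope: lower-row corners minus upper-row corners
    H[m + r, c10] = 0.5
    H[m + r, c11] = 0.5
    H[m + r, c00] = -0.5
    H[m + r, c01] = -0.5
    return H

n = 6  # illustrative grid size
H = fried_matrix(n)
null_dim = n * n - np.linalg.matrix_rank(H)

i, j = np.indices((n, n))
waffle = (1.0 - 2.0 * ((i + j) % 2)).ravel()
piston = np.ones(n * n)

print("dimension of the null space:", null_dim)
print("max |H @ waffle|:", np.abs(H @ waffle).max())
print("max |H @ piston|:", np.abs(H @ piston).max())
```

For this full square grid the null space is two-dimensional, spanned by piston and waffle. Real apertures are circular and partially illuminated at the edge, so the picture there is less clean; this sketch does not attempt to model that.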

We stress here that waffle mode error does not depend fundamentally on the actuator location and geometry: it only depends on the sensor geometry[9].

Waffle modes in Deformable Mirrors

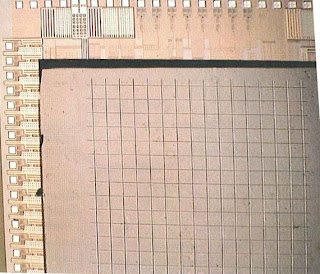

The best DMs that we make use a quasi-hex geometry, which reduces waffle pattern noise. In continuous-facesheet mirrors, the actuators push and pull on the surface. Since the surface is continuous, there is some mechanical crosstalk from one actuator to the next, described by the so-called influence function, but it can be controlled through software within the control computer. Because most DMs have regular arrays of actuators, in either square or hexagonal geometries, the alternate pushing and pulling of adjacent actuators can impart a patterned surface that resembles a waffle. The "waffle mode" can appear in images as a regular pattern, because the patterned surface acts as an unwanted diffraction grating[10].
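The grating effect can be illustrated with a small Fourier-optics calculation. This is a deliberately idealised sketch: a square, fully illuminated aperture, piston-like actuators each covering a 2 x 2 block of pixels (no realistic influence function), and a hypothetical waffle amplitude of 0.3 rad. The function name `waffle_psf` is an illustrative choice.

```python
import numpy as np

def waffle_psf(n_act=8, amplitude_rad=0.3):
    """Normalised far-field intensity of a square pupil carrying a waffle phase.

    Each actuator is drawn as a 2 x 2 block of pixels (piston-like,
    no influence function). n_act should be even.
    """
    i, j = np.indices((n_act, n_act))
    waffle = 1.0 - 2.0 * ((i + j) % 2)                # +1 / -1 checkerboard
    phase = amplitude_rad * np.kron(waffle, np.ones((2, 2)))
    field = np.exp(1j * phase)
    psf = np.abs(np.fft.fft2(field)) ** 2
    return psf / psf.sum()

amplitude = 0.3
psf = waffle_psf(n_act=8, amplitude_rad=amplitude)
m = psf.shape[0]
q = m // 4  # bin of the waffle frequency: one cycle per two actuators

core = psf[0, 0]
spots = [psf[q, q], psf[q, m - q], psf[m - q, q], psf[m - q, m - q]]

print("fraction of light left in the core:", core)
print("fraction in each diagonal spot:", spots)
print("light unaccounted for:", 1.0 - core - sum(spots))
```

In this model the light removed from the core is redistributed into four spots at the diagonal spatial frequencies of the actuator grid, which is the signature of a two-dimensional grating. A real mirror with smooth influence functions and a circular aperture would give broadened spots with different relative strengths.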

Removing the waffle modes

A weighting approach proposed by Gavel[1] actually changes the mode space structure. It introduces an actuator weighting, but instead of a scalar regularisation parameter it uses a positive-definite matrix. A priori statistical information about the quantities to be determined and about the measurement noise can be used to set the weighting matrices in the cost function terms. The technique penalises all local waffle behaviour through the weighting matrix. It was found[1] that only modifying the cost function with a penalty term on the actuators actually modifies the mode space. With a suitable weighting matrix on the actuators, waffle behaviour can be sorted into the modes that have the least influence in the reconstruction matrix, thus suppressing waffle in the wavefront solutions.
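The idea of replacing a scalar regulariser with a matrix penalty can be sketched as a regularised least-squares problem. The code below is an illustration only and does not reproduce the weighting matrix of [1]: it assumes an idealised Fried geometry, uses synthetic random slopes, and builds one plausible penalty from the local checkerboard pattern (+1, -1, -1, +1) of every 2 x 2 cell. The helper names and the values of `eps` and `alpha` are hypothetical.

```python
import numpy as np

def fried_matrix(n):
    """Slope operator for an n x n grid of phase points (Fried geometry)."""
    idx = np.arange(n * n).reshape(n, n)
    c00, c01 = idx[:-1, :-1].ravel(), idx[:-1, 1:].ravel()
    c10, c11 = idx[1:, :-1].ravel(), idx[1:, 1:].ravel()
    m = c00.size
    r = np.arange(m)
    H = np.zeros((2 * m, n * n))
    H[r, c01] = 0.5
    H[r, c11] = 0.5
    H[r, c00] = -0.5
    H[r, c10] = -0.5
    H[m + r, c10] = 0.5
    H[m + r, c11] = 0.5
    H[m + r, c00] = -0.5
    H[m + r, c01] = -0.5
    return H

def local_waffle_penalty(n):
    """Sum over all 2 x 2 cells of v v^T, with v = (+1, -1, -1, +1)."""
    idx = np.arange(n * n).reshape(n, n)
    cells = (n - 1) ** 2
    r = np.arange(cells)
    C = np.zeros((cells, n * n))
    C[r, idx[:-1, :-1].ravel()] = 1.0
    C[r, idx[:-1, 1:].ravel()] = -1.0
    C[r, idx[1:, :-1].ravel()] = -1.0
    C[r, idx[1:, 1:].ravel()] = 1.0
    return C.T @ C

def reconstruct(H, s, V):
    """Minimise |s - H a|^2 + a^T V a, i.e. solve (H^T H + V) a = H^T s."""
    return np.linalg.solve(H.T @ H + V, H.T @ s)

n = 8
H = fried_matrix(n)
L = local_waffle_penalty(n)
rng = np.random.default_rng(0)
s = rng.normal(size=H.shape[0])  # synthetic noisy slopes, not real data

eps, alpha = 1e-3, 1.0           # hypothetical regularisation strengths
identity = np.eye(n * n)
a_scalar = reconstruct(H, s, eps * identity)
a_matrix = reconstruct(H, s, eps * identity + alpha * L)  # positive definite

print("local waffle energy, scalar penalty:", a_scalar @ L @ a_scalar)
print("local waffle energy, matrix penalty:", a_matrix @ L @ a_matrix)
```

Adding the matrix term cannot increase the local waffle energy of the solution, and with noisy slopes it generally lowers it, at the cost of a slightly worse fit to the measurements. How the weights should be chosen from a priori statistics is the subject of [1] and is not addressed here.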

The problem is that when waffle modes go unsensed, they are not corrected by the DM and can accumulate during closed-loop operation. This accumulation leads to serious performance problems. The simplest remedy is for the adaptive-optics operator to open the feedback loop in order to clear the DM commands and so remove the waffle.

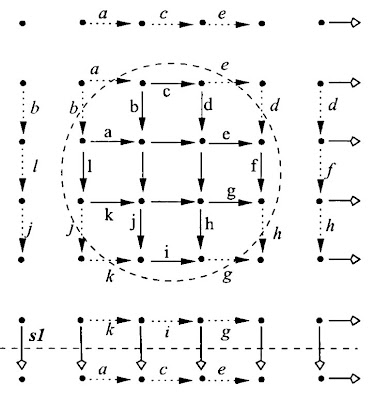

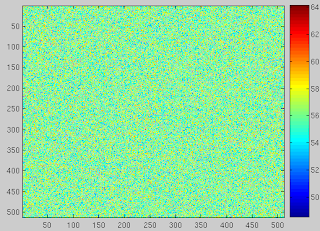

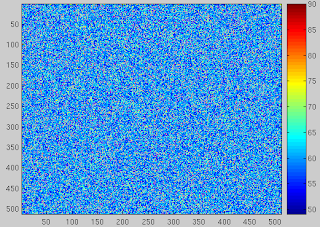

The waffle pattern accumulates in a closed-loop feedback system because the sensor cannot sense the mode and so cannot drive its compensation. Spatially filtering the DM commands makes it possible to keep a conventional reconstructor while still being able to mitigate the waffle mode when it occurs[3]. In those experiments the authors used a leaky integrator.
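The difference between a pure and a leaky integrator for an unsensed mode can be shown with a one-line recursion. This is a minimal sketch assuming that a small constant waffle contribution enters the commands at every step (a simplification of noise or misregistration) and that the sensor returns no error signal for it; the leak factor, disturbance and step count are hypothetical.

```python
def integrate_waffle(leak, disturbance=0.01, steps=500):
    """Iterate c[k+1] = leak * c[k] + disturbance for the waffle coefficient.

    The WFS is blind to waffle, so no feedback term appears: only the
    leak can pull the accumulated command back towards zero.
    """
    c = 0.0
    for _ in range(steps):
        c = leak * c + disturbance
    return c

pure = integrate_waffle(leak=1.0)    # pure integrator: grows without bound
leaky = integrate_waffle(leak=0.99)  # leaky integrator: saturates

print("pure integrator after 500 steps: ", pure)
print("leaky integrator after 500 steps:", leaky)
print("leaky steady-state limit:        ", 0.01 / (1.0 - 0.99))
```

With a leak factor below one the accumulated waffle stays bounded by disturbance / (1 - leak), whereas the pure integrator grows linearly with time. The leak also attenuates the modes that are sensed, which is the motivation for filtering waffle selectively instead.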

The paper[3] analyses a technique that senses the waffle mode in the deformable mirror commands and applies a spatial filter to those commands in order to mitigate it. Spatially filtering the deformable mirror commands directly preserves the reconstruction of the high-frequency phase of interest while making it possible to remove the waffle-like pattern when it arises[3].

The technique presented in that paper[3] removes the waffle only when it is detected, and does not affect the reconstruction of the phase when the mode has not accumulated to a degree that degrades the performance of the system.
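A detect-then-filter step of this kind can be sketched as a projection onto the checkerboard pattern followed by a conditional subtraction. This is not the spatial filter of [3], only a simple stand-in under stated assumptions: a square actuator grid, a global waffle pattern, and a hypothetical detection threshold. The function name `filter_waffle` is illustrative.

```python
import numpy as np

def filter_waffle(commands, threshold):
    """Remove the global waffle component of DM commands if it exceeds threshold.

    Returns the (possibly filtered) commands and the measured waffle coefficient.
    """
    i, j = np.indices(commands.shape)
    waffle = 1.0 - 2.0 * ((i + j) % 2)
    # Projection onto the checkerboard; <waffle, waffle> = number of actuators.
    coeff = np.sum(commands * waffle) / waffle.size
    if abs(coeff) > threshold:
        commands = commands - coeff * waffle
    return commands, coeff

n = 8
i, j = np.indices((n, n))
checker = 1.0 - 2.0 * ((i + j) % 2)
focus = ((i - 3.5) ** 2 + (j - 3.5) ** 2) / 10.0  # smooth shape to preserve

strong = focus + 0.5 * checker   # waffle has built up
weak = focus + 0.01 * checker    # waffle below the detection threshold

cleaned, c_strong = filter_waffle(strong, threshold=0.1)
untouched, c_weak = filter_waffle(weak, threshold=0.1)

print("detected coefficients:", c_strong, c_weak)
print("residual after cleaning:", np.abs(cleaned - focus).max())
print("weak case modified:", not np.array_equal(untouched, weak))
```

When the measured coefficient is below the threshold the commands pass through unchanged, so the reconstruction is untouched until the waffle has actually accumulated. A local filter would be needed to catch waffle confined to part of the aperture; this sketch handles only the global pattern.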

Bibliography

- [1] D.T. Gavel. Suppressing anomalous localized waffle behavior in least-squares wavefront reconstructors. 4839:972, 2003.

- [2] D.L. Fried. Least-square fitting a wave-front distortion estimate to an array of phase-difference measurements. JOSA, 67(3):370-375, 1977.

- [3] K.P. Vitayaudom, D.J. Sanchez, D.W. Oesch, P.R. Kelly, C.M. Tewksbury-Christle, and J.C. Smith. Dynamic spatial filtering of deformable mirror commands for mitigation of the waffle mode. In SPIE Optics & Photonics Conference, Advanced Wavefront Control: Methods, Devices, and Applications VII, Vol. 7466: 746609, 2009.

- [4] L.A. Poyneer. Advanced techniques for Fourier transform wavefront reconstruction. 4839:1023, 2003.

- [5] R.B. Makidon, A. Sivaramakrishnan, L.C. Roberts Jr, B.R. Oppenheimer, and J.R. Graham. Waffle mode error in the AEOS adaptive optics point-spread function. 4860:315, 2003.

- [6] A.N. Kolmogorov. Dissipation of energy in the locally isotropic turbulence. Proceedings: Mathematical and Physical Sciences, 434(1890):15-17, 1991.

- [7] A.N. Kolmogorov. The local structure of turbulence in incompressible viscous fluid for very large Reynolds numbers. Proceedings: Mathematical and Physical Sciences, 434(1890):9-13, 1991.

- [8] A. Glindemann, S. Hippler, T. Berkefeld, and W. Hackenberg. Adaptive optics on large telescopes. Experimental Astronomy, 10(1):5-47, 2000.

- [9] R.B. Makidon, A. Sivaramakrishnan, M.D. Perrin, L.C. Roberts Jr, B.R. Oppenheimer, R. Soummer, and J.R. Graham. An analysis of fundamental waffle mode in early AEOS adaptive optics images. The Publications of the Astronomical Society of the Pacific, 117:831-846, August 2005.

- [10] R.K. Tyson. Adaptive optics engineering handbook. CRC Press, 2000.