Since I need to design a controller for adaptive optics, I have been looking into Kalman filtering and optimal control. Here is a small collection of helpful materials I have found so far. An introduction to Kalman filters is here.

For an excellent web site, see Welch/Bishop's KF page.

Some tutorials, references, and research related to the Kalman filter.

A Kalman filter toolbox can be reached here.

MATLAB functions for ARMA

Maximum Likelihood Estimation

This section explains how the garchfit estimation engine uses maximum likelihood to estimate the parameters needed to fit the specified models to a given univariate return series.

Lots of MATLAB m-files for ARMA are in:

Discussions

Does anyone out there have MATLAB code for fitting ARMA models (with specified autoregressive order p and moving average order q) to time series data?

>> help stats

Statistics Toolbox.

Version 3.0 (R12) 1-Sep-2000

see System Identification TB

Please see "ARMAX", and "PEM" in the System Identification toolbox.

For time series, the function "PRONY" in the Signal Processing toolbox, and the function "HPRONY" (which is based on PRONY) in the HOSA (Higher Order Spectral Analysis) toolbox, can also be used.

u=iddata(timeseries)

m = armax(u,[p q]) %ARMA(p,q)

result:

Discrete-time IDPOLY model: A(q)y(t) = C(q)e(t)

A(q) = 1 - 1.216 q^-1 + 0.7781 q^-2

C(q) = 1 - 1.362 q^-1 + 0.8845 q^-2 + 0.09506 q^-3

Estimated using ARMAX from data set z

Loss function 0.000768896 and FPE 0.00084009

Sampling interval: 1

Does anyone have an idea how to fit it using MATLAB code?

Thanks.

An ARIMA model can be created by using differenced data with ARMAX. For example, use diff(x) rather than x as the output data and then use the ARMAX command. A pole at 1 can be added to the resulting model to obtain a true ARIMA model.

m = armax(diff(x),[p,q])

m.a = conv(m.a,[1 -1]);

Also note that you can specify a differencing order using DIFF(X,ORDER) based on the trend (stationarity) observed in your data.

And remember too if you do forecasting this way that you have to integrate the resulting predicted time series by recursively summing the predicted values using the last observation value as your initial condition.
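The differencing and re-integration steps described above can be sketched with base MATLAB functions only. This is a minimal illustration under stated assumptions: the series `x`, the forecast values `dx_pred`, and the polynomial `a_arma` are made-up numbers standing in for real data and for the output of a fitted model.

```matlab
% Toy series (illustrative data, not from the discussion above)
x = [2 4 7 11 16]';

% First difference; an ARMA model would be fitted to dx instead of x
dx = diff(x);                        % [2 3 4 5]'

% Hypothetical forecasts of the differenced series from such a model
dx_pred = [6 7 8]';

% Integrate the forecasts: cumulative sum of the predicted differences,
% started from the last observed value of the original series
x_pred = x(end) + cumsum(dx_pred);   % [22 29 37]'

% Adding a pole at 1 multiplies the AR polynomial by (1 - q^-1)
a_arma  = [1 -0.5];                  % example A(q) = 1 - 0.5 q^-1
a_arima = conv(a_arma, [1 -1]);      % [1 -1.5 0.5]
```

For a differencing order of 2, i.e. diff(x,2), the integration step would have to be applied twice, each time with the appropriate last observed value as the initial condition.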

MATLAB has built-in functions in its toolbox to estimate ARMA/GARCH parameters once a model is specified (garchfit). It also tells me that it is using a Maximum Likelihood Estimation procedure, and it seems to do it very fast. I am curious whether anybody knows the details: is it doing an MLE with an i.i.d. assumption (which is clearly false), or does it actually do the recursive factorization of the joint pdf?

I think you should check the info pages on the MathWorks website about the Econometrics Toolbox. You can download PDFs with a lot of information about the toolbox and the computational details. I was curious... it seems to be a nonlinear programming approach of an iterative nature.
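To make the "recursive factorization of the joint pdf" from the question concrete, here is a minimal sketch for a Gaussian AR(1) model with constant variance. It only illustrates the general idea of summing conditional log-densities of one-step prediction errors; it is not garchfit's actual code, and `phi`, `sigma2`, and the data `y` are assumed values chosen for the example.

```matlab
phi    = 0.6;                        % assumed AR(1) coefficient
sigma2 = 1.0;                        % assumed innovation variance
y      = [0.5 0.8 0.1 -0.4 0.3]';    % illustrative data

% One-step prediction errors e(t) = y(t) - phi*y(t-1), conditioning on y(1)
e = y(2:end) - phi*y(1:end-1);

% Conditional Gaussian log-likelihood: sum over t of log f(y(t) | y(t-1))
logL = -0.5*sum(log(2*pi*sigma2) + e.^2/sigma2);
```

An optimizer would then search over the parameters (here `phi` and `sigma2`) to maximize `logL`, which matches the iterative, nonlinear programming flavor mentioned in the answer above.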

This section of the documentation explains the MLE calculation:

http://www.mathworks.com/help/toolbox/econ/f1-80052.html

I am trying to use the System Identification Toolbox to determine the best ARMA or ARIMA model to use. I am getting errors from arxstruc and selstruc. I also get errors if I specify the ARMA model without a 1 for the MA order.

There are errors in the docs, which say conflicting things about which model forms will work (e.g. "Note Use selstruc for single-output systems only. selstruc supports both single-input and multiple-input systems.")

AR(I)MA system identification is inherently difficult.

Don't expect any such routine to work unconditionally.

I've been working on developing an input/output ARMA model for a surface roughness profile. I am using the MATLAB armax command for that, which gives me the flexibility to choose my model's order. I was just wondering, is there any way I can plot the residuals obtained from the ARMA model? My intention is to verify the adequacy of the developed model, which is why I want to compute the autocorrelation function of the residuals. But I'm really confused about how to do that.

Obtain the numerator and denominator of the ARMA model (they should be in the ARMA model structure), then use filter() with these parameters and the ARMA system input as arguments. Take the output from filter() and compare it to the original output to see the residuals and all that.

Thank you very much for your suggestion. The thing is, MATLAB returns the ARMA model as an IDPOLY object. For instance, in my case the intended structure is like this:

Discrete-time IDPOLY model:

A(q)y(t) = B(q)u(t) + C(q)e(t)

where, y(t)=> output, u(t)=> input

And

A(q) = 1 - 0.8356 q^-1 + 0.1549 q^-2

B(q) = 0.08899 q^-2 + 0.08928 q^-3

C(q) = 1 + 0.2435 q^-1 + 0.3926 q^-2

For this kind of model, what should be the numerator and denominator? It would be great, if you state a bit more explicitly.

Hi Hafiz,

As a difference equation your model is:

y(t)=0.8356*y(t-1)-0.1549*y(t-2)+0.08899*u(t-2)+0.08928*u(t-3)+e(t)+0.2435*e(t-1)+0.3926*e(t-2)

y(t) is the output, u(t) is the exogenous term, and e(t) is the white noise disturbance. In this model, you are writing the output as a sum of two convolutions, the first one being a convolution of the input, u(t), with the impulse response, and the 2nd convolution being a filtering of the disturbance, or noise term, e(t). Hence, the transfer function is the ratio of:

(0.08899*z^{-2}+0.08928*z^{-3})/(1-0.8356*z^{-1}+0.1549*z^{-2})

To view:

fvtool([0 0 0.08899 0.08928],[1 -0.8356 0.1549])

Hope that helps.
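With the same polynomials, the residuals can also be recovered with filter() by inverting the noise filter: from A(q)y(t) = B(q)u(t) + C(q)e(t) it follows that e(t) = [A(q)y(t) - B(q)u(t)]/C(q). The sketch below checks this on simulated data. The input `u` and the noise `e0` are random stand-ins (an assumption for the demonstration); with real measurements, `y` and `u` would be the recorded output and input, and zero initial conditions are assumed.

```matlab
% Polynomials of the model quoted above, in powers of q^-1
A = [1 -0.8356 0.1549];
B = [0 0 0.08899 0.08928];
C = [1 0.2435 0.3926];

% Simulated input and white-noise disturbance (stand-ins for real data)
N  = 200;
u  = randn(N,1);
e0 = 0.1*randn(N,1);

% Output according to A(q)y = B(q)u + C(q)e
y = filter(B, A, u) + filter(C, A, e0);

% Residuals: e = (A(q)y - B(q)u) / C(q)
e = filter(A, C, y) - filter(B, C, u);

% On simulated data the recovered residuals match the true disturbance
max_err = max(abs(e - e0));
```

On simulated data `max_err` is at rounding-error level. On real data there is no `e0` to compare against, and `e` is simply the residual sequence to inspect.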

Look up functions RESID, COMPARE, PREDICT and PE (in System Identification

Toolbox). In particular, RESID will plot the autocorrelation of residuals.

I need to know something about the 'resid' command. I'm using it like this:

d= resid (arma_model, DAT, 'corr');

plot (d);

The plots show the autocorrelation of the error and the cross-correlation between the error and the input. I'm wondering, is there any way I can plot the autocorrelation of the residuals in normalized form (on a y scale from 0 to 1)?
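One way to get a normalized autocorrelation, independent of what resid plots, is to compute it directly from the residual vector and divide by the lag-0 value. The sketch below uses only base MATLAB; the residual values in `e` and the choice of `maxLag` are assumptions for illustration. Note that the normalized values lie between -1 and 1 (not 0 and 1), with exactly 1 at lag 0.

```matlab
% Illustrative residuals (in practice, the residual vector of the fitted model)
e = [0.2 -0.1 0.4 -0.3 0.1 0.0 -0.2 0.3]';
e = e - mean(e);                 % remove the mean before correlating
N = numel(e);
maxLag = 4;

% Raw autocorrelation sums for lags 0..maxLag
r = zeros(maxLag+1, 1);
for k = 0:maxLag
    r(k+1) = sum(e(1:N-k) .* e(1+k:N));
end

% Normalize by the lag-0 value so that rho(1) equals 1
rho = r / r(1);

% Approximate 95% bound for the lags > 0 of a white residual sequence
bound = 1.96/sqrt(N);

% To plot: stem(0:maxLag, rho)
```

If the model is adequate, the values of `rho` at lags greater than zero should mostly stay within plus or minus `bound`.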

I'm having some issues with the armax function. I have a single input-output time series dataset, and all I want to do is estimate the parameters of an ARMA(2,3) model, without the exogenous term. How do I go about this?

Hi Brian,

You can do

% creating some model data ARMA(1,2)

A=[1 -0.9];

B = [ 1 0.2000 0.4000];

rng('default'); % reset the random number generator for reproducibility

y = filter(B,A,randn(1000,1));

Data = iddata(y,[],1); % no exogenous term

Model =armax(Data,[1 2]);

% displaying Model

Model

Discrete-time IDPOLY model: A(q)y(t) = C(q)e(t)

A(q) = 1 - 0.9012 q^-1

C(q) = 1 + 0.2259 q^-1 + 0.4615 q^-2

Estimated using ARMAX on data set Data

Loss function 0.993907 and FPE 0.999895

Sampling interval: 1

Compare A(q) to A and C(q) to B

Hope that helps.

MATLAB software about ARMA modelling

ARMA PARAMETER ESTIMATION

This function provides an ARMA spectral estimate which is maximum entropy, satisfying correlation constraints (number of poles) and cepstrum constraints (number of zeros).

The function requires 3 inputs: the input signal, the order of the denominator, and the order of the numerator. The output variables are the numerator coefficients, the denominator coefficients, and the square root of the input noise power.

by Miguel Angel Lagunas Hernandez

Estimating the parameters of AR-MA sequences is fundamental. Here we present another method for ARM.

Automatic Spectral Analysis

by Stijn de Waele

03 Jul 2003 (Updated 09 May 2005)

No BSD License

Automatic spectral analysis for irregular sampling/missing data, analysis of spectral subband.

Accurate estimates of the autocorrelation or power spectrum can be obtained with a parametric model (AR, MA or ARMA). With automatic inference, not only the model parameters but also the model structure are determined from the data. It is assumed that the ARMASA toolbox is present. This toolbox can be downloaded from the MATLAB Central file exchange at www.mathworks.com

The applications of this toolbox are:

- Reduced statistics ARMAsel: A compact yet accurate ARMA model is obtained based on a given power spectrum. Can be used for generation of colored noise with a prescribed spectrum.

- ARfil algorithm: The analysis of missing data/irregularly sampled signals

- Subband analysis: Accurate analysis of a part of the power spectrum

- Vector Autoregressive modeling: The automatic analysis of auto- and crosscorrelations and spectra

- Detection: Generally applicable test statistic to determine whether two signals have been generated by the same process or not. Based on the Kullback-Leibler index or Likelihood Ratio.

- Analysis of segments of data, possibly of unequal length.

For background information see my PhD thesis, available at http://www.dcsc.tudelft.nl/Research/PubSSC/thesis_sdewaele.html

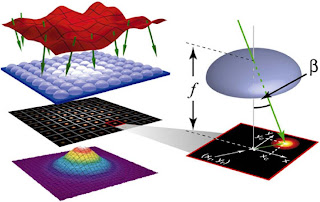

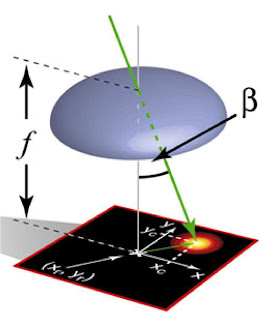

where $L_x$ is the size of the photosensor's pixel and $f$ is the focal length of the lenslet.
