The Problem: shooting with exposure times longer than 30 seconds requires Bulb mode, but we want to automate the shooting process.

The Solution: using a few common chips and a bash script on Linux, we can build a PC-driven remote control for a Canon digital camera.

What we have

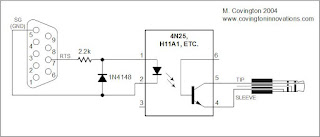

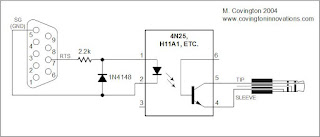

We have a Canon EOS 400D digital camera, a Debian-powered notebook, and the need to shoot pictures with exposure times longer than 30 seconds. There is a good scheme proposed by Michael A. Covington here; a copy is mirrored on this page as well.

This is a pretty good scheme, but it doesn't work with my Canon EOS 400D: the shutter goes up but does not come back down.

After personal communication with Michael, I suspect the cause of this problem is the firmware version of my camera. In any case, after playing around a bit we found a solution.

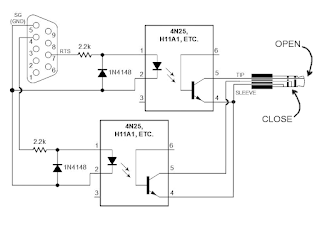

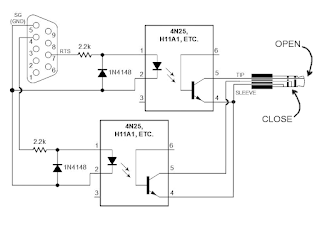

Scheme for Canon EOS 400D

After some trial-and-error iterations, my colleague Alexey Ropyanoi and I found out why the proposed scheme did not work. We now propose the following scheme:

We added one more cascade (switching stage) to control the third contact of the jack, and it works! Our laboratory Canon EOS 400D now opens and closes the shutter on command from the computer.

Necessary electronic components

To build such a remote shutter cable, you need a 4-wire cable (from audio devices or a telephone cable), a 2.5 mm (3/32 inch) jack, the electronic chips mentioned above, a 9-pin COM-port connector, and a USB-COM adapter (for using this cable with modern computers that lack a COM port).

The best USB-COM adapter is one based on the Prolific PL2303 chip: it is the most common chip, and it works on Linux "out of the box".

Software

A small C program, setSerialSignal, is required for remote control of the camera. The source code is here, and it can be compiled with GCC:

```bash
gcc -o setSerialSignal setSerialSignal.c
```

It works on Debian GNU/Linux v4.0 r.0 "Etch" with gcc version 4.1.2 20061115 (prerelease) (Debian 4.1.1-21).

This is the code:

```c
/*
 * setSerialSignal v0.1 9/13/01
 * www.embeddedlinuxinterfacing.com
 *
 *
 * The original location of this source is
 * http://www.embeddedlinuxinterfacing.com/chapters/06/setSerialSignal.c
 *
 * This program is free software; you can redistribute it and/or modify
 * it under the terms of the GNU Library General Public License as
 * published by the Free Software Foundation; either version 2 of the
 * License, or (at your option) any later version.
 *
 * This program is distributed in the hope that it will be useful, but
 * WITHOUT ANY WARRANTY; without even the implied warranty of
 * MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU
 * Library General Public License for more details.
 *
 * You should have received a copy of the GNU Library General Public
 * License along with this program; if not, write to the
 * Free Software Foundation, Inc.,
 * 59 Temple Place, Suite 330, Boston, MA 02111-1307 USA
 */

/* setSerialSignal
 * setSerialSignal sets the DTR and RTS serial port control signals.
 * This program queries the serial port status then sets or clears
 * the DTR or RTS bits based on user supplied command line setting.
 *
 * setSerialSignal clears the HUPCL bit. With the HUPCL bit set,
 * when you close the serial port, the Linux serial port driver
 * will drop DTR (assertion level 1, negative RS-232 voltage). By
 * clearing the HUPCL bit, the serial port driver leaves the
 * assertion level of DTR alone when the port is closed.
 */

/*
gcc -o setSerialSignal setSerialSignal.c
*/

#include <stdio.h>      /* printf */
#include <stdlib.h>     /* exit */
#include <unistd.h>     /* close */
#include <fcntl.h>      /* open, O_RDWR */
#include <termios.h>    /* tcgetattr, tcsetattr, HUPCL */
#include <sys/ioctl.h>  /* ioctl, TIOCMGET, TIOCMSET, TIOCM_DTR, TIOCM_RTS */

/* we need a termios structure to clear the HUPCL bit */
struct termios tio;

int main(int argc, char *argv[])
{
    int fd;
    int status;

    if (argc != 4)
    {
        printf("Usage: setSerialSignal port DTR RTS\n");
        printf("Usage: setSerialSignal /dev/ttyS0|/dev/ttyS1 0|1 0|1\n");
        exit(1);
    }

    if ((fd = open(argv[1], O_RDWR)) < 0)
    {
        printf("Couldn't open %s\n", argv[1]);
        exit(1);
    }

    tcgetattr(fd, &tio);          /* get the termio information */
    tio.c_cflag &= ~HUPCL;        /* clear the HUPCL bit */
    tcsetattr(fd, TCSANOW, &tio); /* set the termio information */

    ioctl(fd, TIOCMGET, &status); /* get the serial port status */

    /* An argument of '1' clears the TIOCM bit (assertion level 1,
       negative RS-232 voltage); '0' sets it (positive voltage). */
    if (argv[2][0] == '1')        /* set the DTR line */
        status &= ~TIOCM_DTR;
    else
        status |= TIOCM_DTR;

    if (argv[3][0] == '1')        /* set the RTS line */
        status &= ~TIOCM_RTS;
    else
        status |= TIOCM_RTS;

    ioctl(fd, TIOCMSET, &status); /* set the serial port status */

    close(fd);                    /* close the device file */
    return 0;
}
```
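Note that the argument convention is inverted relative to the kernel's TIOCM bit names: an argument of `1` clears `TIOCM_DTR`/`TIOCM_RTS` (negative RS-232 voltage, the "assertion level 1" from the header comment), and `0` sets the bit. The sketch below is our own refactoring, not part of the original program: it isolates that mapping in a pure function, hypothetically named `serial_status_from_args`, so the argument combinations used for the camera can be checked without a serial port. It assumes Linux, where `<sys/ioctl.h>` defines the `TIOCM_*` constants.

```c
#include <sys/ioctl.h>  /* TIOCM_DTR, TIOCM_RTS (Linux) */

/* Hypothetical helper reproducing setSerialSignal's convention:
 * an argument starting with '1' clears `bit` (negative RS-232 voltage),
 * anything else sets it (positive RS-232 voltage). */
static int apply_level(int status, int bit, const char *arg)
{
    if (arg[0] == '1')
        return status & ~bit;
    return status | bit;
}

/* Hypothetical: compute the value passed to ioctl(fd, TIOCMSET, ...)
 * from the current status and the DTR/RTS command-line arguments.
 * Other modem bits in `status` are left untouched. */
int serial_status_from_args(int status, const char *dtr, const char *rts)
{
    status = apply_level(status, TIOCM_DTR, dtr);
    status = apply_level(status, TIOCM_RTS, rts);
    return status;
}
```

Inside `main`, the two if/else blocks could then be replaced by `status = serial_status_from_args(status, argv[2], argv[3]);` between the `TIOCMGET` and `TIOCMSET` calls.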

Sending signals

Compile setSerialSignal and make sure it is executable. The following signals are used to open and close the shutter:

| Signal | Command |
|---|---|
| DTR | `setSerialSignal /dev/ttyS0 1 0` |
| Clear DTR | `setSerialSignal /dev/ttyS0 0 0` |
| RTS | `setSerialSignal /dev/ttyS0 0 1` |
| Clear RTS | `setSerialSignal /dev/ttyS0 1 1` |

The shutter opens on DTR and closes on RTS.

Shell script for remote shooting

To automate taking pictures, it is convenient to use a bash script written by Eugeni Romas aka BrainBug. Here is the modified version:

```bash
#!/bin/bash
PORT=/dev/ttyUSB0   # serial port of the USB-COM adapter; edit if yours differs

for i in $(seq "$3"); do
    setSerialSignal "$PORT" 0 0 &&
    sleep "$1" && setSerialSignal "$PORT" 0 1 &&
    sleep 0.3 && setSerialSignal "$PORT" 0 0 &&
    sleep "$2" && setSerialSignal "$PORT" 1 1 && echo "One more image captured!" &&
    sleep "$4"
done

echo "Done!"
```

Script parameters:

1. shutter opening delay, in seconds
2. exposure time, in seconds
3. number of shots
4. delay between shots, in seconds

Example:

```bash
make_captures 4 60 30 2
```
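Each pass of the loop holds the serial lines in four states for fixed times, so the length of a session can be worked out in advance. As a sketch of our own (the names `struct step`, `build_steps` and `run_duration_ms` are hypothetical, and all times are in milliseconds), the C code below encodes the script's per-shot sequence as a table and adds up the nominal duration of a run. It ignores the time setSerialSignal itself takes, so a real run will be slightly longer.

```c
#include <stddef.h>

/* One setSerialSignal call from the script and the sleep that follows it. */
struct step {
    char dtr;      /* first argument: '0' or '1' */
    char rts;      /* second argument: '0' or '1' */
    long hold_ms;  /* sleep after this call, in milliseconds */
};

/* Fill out[] with the per-shot sequence of the bash script.
 * delay_ms = parameter 1, exposure_ms = parameter 2, pause_ms = parameter 4.
 * Returns the number of steps written (always 4). */
size_t build_steps(struct step out[4], long delay_ms, long exposure_ms, long pause_ms)
{
    out[0] = (struct step){'0', '0', delay_ms};    /* wait parameter 1 */
    out[1] = (struct step){'0', '1', 300};         /* 0.3 s "0 1" pulse */
    out[2] = (struct step){'0', '0', exposure_ms}; /* held for the exposure time */
    out[3] = (struct step){'1', '1', pause_ms};    /* "1 1", then wait parameter 4 */
    return 4;
}

/* Nominal duration of the whole run; the script also pauses after the last shot. */
long run_duration_ms(long delay_ms, long exposure_ms, long shots, long pause_ms)
{
    struct step s[4];
    size_t n = build_steps(s, delay_ms, exposure_ms, pause_ms);
    long per_shot = 0;

    for (size_t i = 0; i < n; i++)
        per_shot += s[i].hold_ms;
    return shots * per_shot;
}
```

For the example above, `run_duration_ms(4000, 60000, 30, 2000)` gives 1,989,000 ms, about 33 minutes of shooting.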

The script is set up for a USB-COM adapter (/dev/ttyUSB0); edit it if your port is different.

How it works

Once the remote shutter cable is ready, plug in the USB-COM adapter with the cable and then:

- Turn on the camera, set Bulb mode, and set the aperture and ISO speed.

- Insert the jack into the camera and plug the other end of the cable into the USB-COM adapter.

- Check the dmesg log: the kernel should recognize the chip and print something like this:

```
usb 2-1: new full speed USB device using uhci_hcd and address 2
usb 2-1: configuration #1 chosen from 1 choice
drivers/usb/serial/usb-serial.c: USB Serial support registered for pl2303
pl2303 2-1:1.0: pl2303 converter detected
usb 2-1: pl2303 converter now attached to ttyUSB0
usbcore: registered new interface driver pl2303
drivers/usb/serial/pl2303.c: Prolific PL2303 USB to serial adaptor driver
```

- Now you can take pictures:

```bash
make_captures 1 5 2 3
```

Here we take 2 images with a 5-second exposure, a 3-second delay between shots, and a 1-second shutter opening delay.
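If you automate the whole procedure, you may prefer to take the device name from the kernel message instead of hard-coding /dev/ttyUSB0. A minimal sketch, assuming the message format shown in the dmesg log above (`... converter now attached to ttyUSB0`), which may differ between kernel versions; the function name `tty_from_dmesg_line` is hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: if `line` contains "attached to <name>",
 * write "/dev/<name>" into `out` and return 1; otherwise return 0. */
int tty_from_dmesg_line(const char *line, char *out, size_t out_size)
{
    const char *key = "attached to ";
    const char *p = strstr(line, key);
    char name[32];

    if (p == NULL)
        return 0;
    /* Read the device name up to the next whitespace (at most 31 chars). */
    if (sscanf(p + strlen(key), "%31s", name) != 1)
        return 0;
    snprintf(out, out_size, "/dev/%s", name);
    return 1;
}
```

Each line of `dmesg` output can be passed to this function in turn; the last match is the most recently attached adapter.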

Acknowledgements

I would like to express my gratitude to:

- Michael A. Covington for his original article "Building a Cable Release and Serial-Port Cable for the Canon EOS 300D Digital Rebel".

- Eugeni Romas aka BrainBug for the link to the original post and the discussion.

- Anton aka NTRNO for finding key posts at Astrophorum.

- Alexey Ropyanoi, who experimentally found the problem and eliminated it by proposing a new scheme.

I am also deeply thankful to my colleagues of the Solid State Physics department, Moscow Engineering Physics Institute, Russia.