Optimization problems in LaTeX

In optimization, we want to minimize or maximize an objective function over a set of decision variables subject to constraints. There are different ways to format optimization problems; a good convention is the one used in the excellent book “Convex Optimization” by Stephen Boyd and Lieven Vandenberghe. A general optimization problem has the form:

The LaTeX code for this formula is the following:

\begin{equation*}
\begin{aligned}
& \underset{x}{\text{minimize}}
& & f_0(x) \\
& \text{subject to}
& & f_i(x) \leq b_i, \; i = 1, \ldots, m.
\end{aligned}
\end{equation*}
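For readers who want to compile the snippet on its own, here is a minimal sketch of a complete document. It extends the general form with equality constraints on a separate row; the functions `h_j` and the count `p` are illustrative assumptions that do not appear in the formula above.

```latex
\documentclass{article}
\usepackage{amsmath} % provides aligned, \text, and \underset

\begin{document}

\begin{equation*}
\begin{aligned}
& \underset{x}{\text{minimize}}
& & f_0(x) \\
& \text{subject to}
& & f_i(x) \leq b_i, \; i = 1, \ldots, m, \\
% h_j and p are hypothetical, added only to show a second constraint row
&&& h_j(x) = 0, \; j = 1, \ldots, p.
\end{aligned}
\end{equation*}

\end{document}
```

The second constraint row starts with `&&&` so that it skips the first three columns and lines up with the constraints in the fourth one.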

If we want to perform quadratic programming, the notation will be the following:

The LaTeX code for it is:

\begin{equation*}
\begin{aligned}
& \underset{\mathbf{x}}{\text{minimize}}
& & J(\mathbf{x}) = \frac{1}{2} \mathbf{x}^T Q \mathbf{x} + \mathbf{c}^T \mathbf{x} \\
& \text{subject to}
& & A\mathbf{x} \leq \mathbf{b} \text{ (inequality constraint)} \\
&
& & E\mathbf{x} = \mathbf{d} \text{ (equality constraint)}
\end{aligned}
\end{equation*}

Unlike the tabular environment, in which you can specify the alignment of each column, the aligned environment gives each column (separated by &) a default alignment that alternates between right and left. Therefore, all odd columns are right-aligned and all even columns are left-aligned.

Another example of an optimization problem:

and the LaTeX code:

\begin{equation*}
\begin{aligned}
& \underset{X}{\text{minimize}}
& & \mathrm{trace}(X) \\
& \text{subject to}
& & X_{ij} = M_{ij}, \; (i,j) \in \Omega, \\
&&& X \succeq 0.
\end{aligned}
\end{equation*}

Here $X \succeq 0$ means that the matrix $X$ is positive semidefinite.

This LaTeX recipe was found here.
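The alternating column alignment of the aligned environment, which the optimization examples rely on, can be seen in isolation with a small sketch. The symbols below are placeholders chosen only to make the column widths differ.

```latex
\begin{equation*}
\begin{aligned}
% column 1 (right) & column 2 (left) & column 3 (right) & column 4 (left)
a   &= b + c & x   &= y \\
aaa &= b     & xxx &= yyyyy
\end{aligned}
\end{equation*}
```

This is also why each row of the optimization problems starts with `&`: the first (right-aligned) column is left empty, so "minimize" and "subject to" land in the second column and are left-aligned. The following `& &` skips the third column, so the objective and the constraints land in the fourth column and are left-aligned as well.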

The arg max operator can be typeset by attaching the variable as a subscript to \max:

\begin{equation}
\arg\max_{x}
\end{equation}

which will produce the following:

However, you might prefer to center x under the whole arg max expression using the \underset command:

\begin{equation}
\underset{x}{\arg\max}
\end{equation}

and the result will be:

In order to use the \underset command, you need to load the amsmath package in the preamble:

\usepackage{amssymb,amsmath,amsfonts,latexsym,mathtext}

This idea was found here.
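As an alternative to \underset, amsmath also lets you declare a dedicated operator. The sketch below is one possible setup, not the approach from the source above; the command names `\argmax` and `\argmin`, the function `f`, and the symbol `x^\star` are choices made for this illustration.

```latex
\documentclass{article}
\usepackage{amsmath}

% The starred form places subscripts underneath the operator in display math,
% the same way \max and \lim behave.
\DeclareMathOperator*{\argmax}{arg\,max}
\DeclareMathOperator*{\argmin}{arg\,min}

\begin{document}

\begin{equation}
x^\star = \argmax_{x} f(x)
\end{equation}

\end{document}
```

In display equations the subscript is centered under the whole "arg max", while in inline math it is set to the side, so spacing stays consistent with other operators.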

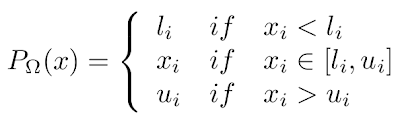

How to add single-sided brackets in LaTeX?

This is a somewhat dirty LaTeX hack, but it works nicely:

\begin{equation}
P_\Omega(x) = \left\{\begin{array}{lll}
l_i & \text{if} & x_i<l_i\\
x_i & \text{if} & x_i\in[l_i,u_i]\\
u_i & \text{if} & x_i>u_i
\end{array}\right.
\end{equation}

and it generates what we want:

Look closely at the \right. statement: the dot at the end is important! Every \left must be paired with a \right, so to produce a single-sided bracket you put the empty delimiter \left. or \right. on the side where no bracket should appear.

The trick has been taken from here: how to add single-sided brackets in latex
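If amsmath is already loaded, the same piecewise definition can probably be written more directly with its cases environment, which draws the left brace for you. This is a sketch of that alternative rather than the trick from the linked post.

```latex
\begin{equation}
P_\Omega(x) =
\begin{cases}
l_i & \text{if } x_i < l_i, \\
x_i & \text{if } x_i \in [l_i, u_i], \\
u_i & \text{if } x_i > u_i.
\end{cases}
\end{equation}
```

The \left\{ ... \right. approach remains useful when the brace has to enclose something other than a two-column list of cases, or when the brace belongs on the right-hand side.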